Cross-lingual Ontology Alignment using EuroWordNet and Wikipedia

Gosse Bouma

The International Conference on Language Resources and Evaluation (LREC) 2010

Introduction

The paper addresses cross-lingual ontology alignment: mapping thesauri in different languages onto each other, and mapping resources that are large and semantically rich but weak in formal structure.

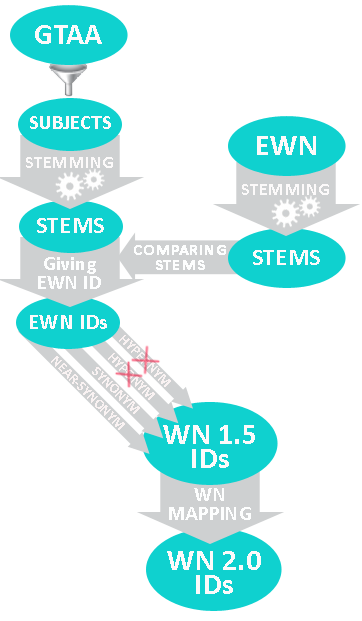

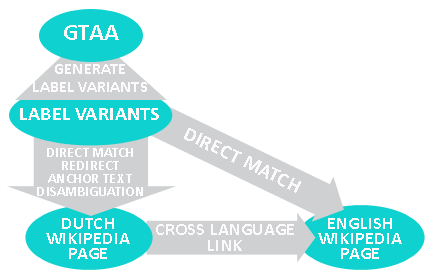

The author describes a system for linking the thesaurus of the Netherlands Institute for Sound and Vision (GTAA) to English WordNet and DBpedia. He describes the approaches his group used for both alignment tasks: GTAA to English WordNet (illustrated in the left image) and GTAA to English DBpedia (illustrated in the right image). For each alignment, the evaluation is presented and compared with the results of another system that also took part in the Very Large Cross-Lingual Resources (VLCR) task of the Ontology Alignment Evaluation Initiative (OAEI) 2009 workshop.

Comments

- Ontology alignment is the process of determining correspondences between the concepts of given ontologies.

- WordNet is a lexical database for the English language. It groups English words into sets of synonyms called synsets, provides short, general definitions, and records the various semantic relations between these synonym sets. The purpose is twofold: to produce a combination of dictionary and thesaurus that is more intuitively usable, and to support automatic text analysis and artificial intelligence applications.

- EuroWordNet is a system of semantic networks for European languages, based on WordNet. Each language develops its own wordnet, but the wordnets are interconnected through interlingual links stored in the Interlingual Index (ILI).

- DBpedia is a project that aims to extract structured information from Wikipedia and to make this information available on the World Wide Web. DBpedia allows users to query relationships and properties associated with Wikipedia resources, including links to other related datasets. As of April 2010, the DBpedia dataset describes more than 3.4 million things, of which 1.5 million are classified in a consistent ontology, including 312,000 persons, 413,000 places, 94,000 music albums, 49,000 films, 15,000 video games, 140,000 organizations, 146,000 species and 4,600 diseases.

- The paper gives no details on how the evaluation scores are calculated. We assume the following widely used method: to evaluate the resulting alignment A, it is compared with a reference (sample) alignment R. Both alignments are treated as sets of correspondences (pairs), and precision and recall from information retrieval are applied: <latex>P = |R \cap A| / |A|</latex> and <latex>Rec = |R \cap A| / |R|</latex>, where <latex>P</latex> is precision, <latex>Rec</latex> is recall, <latex>|R \cap A|</latex> is the number of true positives, <latex>|A|</latex> is the number of retrieved correspondences, and <latex>|R|</latex> is the number of expected correspondences.

- The Very Large Cross-lingual Resources (VLCR) task of the Ontology Alignment Evaluation Initiative (OAEI) workshop, and how it is evaluated.
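The set-based precision and recall that we assume in the comment on evaluation above can be sketched in a few lines of Python. This is only an illustration of that assumed method, not the paper's actual evaluation procedure: the function name `precision_recall` and the toy `gtaa:`/`wn:` identifiers are made up for the example, and the convention of returning 0.0 for an empty set is our own choice.

```python
def precision_recall(alignment, reference):
    """Return (precision, recall) of an alignment A against a reference R.

    Both arguments are iterables of correspondences, here (source, target) pairs.
    precision = |R ∩ A| / |A|, recall = |R ∩ A| / |R|.
    """
    a = set(alignment)
    r = set(reference)
    true_positives = len(a & r)
    # Assumption: an empty alignment or reference yields 0.0 instead of an error.
    precision = true_positives / len(a) if a else 0.0
    recall = true_positives / len(r) if r else 0.0
    return precision, recall


# Hypothetical toy data: four expected correspondences, three returned.
reference = {
    ("gtaa:fiets", "wn:bicycle"),
    ("gtaa:hond", "wn:dog"),
    ("gtaa:kat", "wn:cat"),
    ("gtaa:boot", "wn:boat"),
}
alignment = {
    ("gtaa:fiets", "wn:bicycle"),
    ("gtaa:hond", "wn:dog"),
    ("gtaa:bank", "wn:bank"),  # not in the reference: a false positive
}

p, r = precision_recall(alignment, reference)
print(f"precision = {p:.2f}, recall = {r:.2f}")
```

In this toy case two of the three returned pairs are correct and two of the four expected pairs are found, so precision is 2/3 and recall is 1/2.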

An interesting observation: 67% of the terms from the GTAA (a Dutch linguistic resource) can be mapped to the Dutch WordNet. This is much higher than the coverage of the Czech WordNet over the terms from the PDT (Prague Dependency Treebank). -MK-

What do we like about the paper

- Approaches are described and visualized clearly.

- Both the problems that were overcome and those that remain are described clearly, with examples.

What do we dislike about the paper

- There is not enough information about the sample of results used during the evaluation.

- We could not find out exactly which formulas were used to compute precision and recall.

- Some numbers are missing from the tables, which is confusing.

written by Lasha Abzianidze